(This post is about how I created a discord bot that calculates a wordle leaderboard from messages posted by the official NYT wordle discord bot. A chunk of the post is just explaining how the rankings are calculated, since sometimes it produces some interesting results. If you just want the bot, the link is here)

A few months ago The New York Times introduced a discord bot where you can play Wordle. This is, frankly, a great idea. The initial success of wordle was, in my opinion, because it was naturally something you would talk about with friends, and then those friends would try it if they hadn’t heard of it. This was because there was only one wordle a day; “have you done today’s wordle?” is something I’ve found myself saying both to people I know play it and to people who may never have heard of it. This isn’t unique to wordle, like, there are daily-only crosswords, but what usually happens when such a game goes online is that it lets you play again and again and again. “Here’s 1000 different crosswords! With themes and difficulty levels! Please stay on our site and see all our beautiful ads!”. So wordle was new, fun, short (faster than Sudoku or a crossword), and had a natural transmission method. If you still doubt the popularity of wordle, just google it and look around on the results page.

So creating a discord bot was an obviously good idea. Now you could play the game “with” your friends. You implicitly remind each other to play through the message pings of others playing, and if you miss those, you’ll probably wake up to an @mention from the wordle bot as it summarises yesterday’s scores and presents a winner, just in time for you to play today’s wordle. I found myself playing the wordle in bed in the mornings, just to wake myself up.

What it didn’t really have was any good way to compare yourself to others. Sure, you could see each day’s results, but nothing else. Perhaps the most obvious metric is average score: take every day’s score (1-6), sum them up, and divide by the number of days. Sadly, this isn’t available with the NYT discord bot??? Instead, I’m greeted with:

So you’re telling me that now that I’ve played a few dozen days, and am actually interested in my average, you want me to play another few dozen on your website (because ofc the data isn’t transferrable that would be too convenient) to get a score, and now I can’t play it on discord with my friends anymore??

Nah. Playing on discord is great and I want to keep doing that. I can solve this problem for them, all the data is right there. Each day, there’s that summary I mentioned. It looks like this:

At first, I definitely did not use discord chat exporter, because that would be against discord’s ToS. No, I of course manually copy-pasted all those messages into a nice json file that I could then read in python to calculate some stats. Yep, definitely. Anyways, I used this data for all the further development of the wordle leaderboard, and later I wrapped it all up in a discord bot, which I’ve linked at the bottom of this post. For the rest of this post, you can imagine everything happening inside the bot, since it all works the same way.

Here’s the first problem with just using average scores to calculate a leaderboard:

1. Some people don’t play much

Ok here’s an example leaderboard:

- @busyperson: 3 games, 3.67 average

- @poorsoul: 115 games, 4.03 average

Well ok, you got a 3 one time and two 4s, but does that really mean you deserve the top spot? We could just filter out people with, idk, fewer than 5 games, but “you had a lucky streak” is going to feel like a problem for a while. So what to do? The problem we face is that we don’t have enough data. Maybe @busyperson really is that good, maybe they got lucky; 3 games is just not enough to know. Reasonably, we can manage this uncertainty by estimating how well the average player performs, and making it so that people with fewer games are somehow dragged towards this average. This is called shrinkage, and we can apply it to our results. An added bonus is that it’s symmetrical, so some unlucky person who only played three games and got only 5s will have their score improved.

Let’s say that the average average score is 4, and that the variance of players’ average scores is 0.1. Then, we would calculate @busyperson’s adjusted score like this:

shrinkageFactor = 0.1 / (0.1 + 0.33/3) = 0.48

finalScore = 0.48 × 3.67 + (1 – 0.48) × 4.0 = 1.76 + 2.08 = 3.84

where 0.33 is the sample variance of @busyperson’s scores 3, 4 and 4. Dividing it by the 3 games played gives the uncertainty in their average.

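Essentially we calculate how much weight to give the player’s actual average and how much to give the population average. In general, shrinkageFactor = (variance of players’ averages) / (variance of players’ averages + the player’s score variance / number of games played). So the fewer games someone has played, the smaller the factor, and the further they get dragged towards the average of 4.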
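Here is the same calculation as a small Python sketch. The function and parameter names are my own hypothetical ones, not the bot’s code. `between_var` is the assumed 0.1 spread of players’ averages. By default, the player’s own sample variance is used for game-to-game noise, as in the example above.

```python
from statistics import mean, variance

def shrunk_average(scores, pop_mean, between_var, within_var=None):
    """Pull a player's average towards pop_mean; fewer games means a stronger pull.

    between_var: variance of players' true averages (0.1 in the example).
    within_var:  variance of a single player's game scores. Defaults to the
                 player's own sample variance, which needs at least 2 games.
    """
    n = len(scores)
    if within_var is None:
        within_var = variance(scores)  # sample variance (n - 1 denominator)
    # Weight on the player's own average: between / (between + within / n)
    factor = between_var / (between_var + within_var / n)
    return factor * mean(scores) + (1 - factor) * pop_mean

print(round(shrunk_average([3, 4, 4], pop_mean=4.0, between_var=0.1), 2))  # 3.84
```

This sketch has one design caveat. With very few games, a player’s own variance can be 0 (three 4s, say). That makes the factor exactly 1 and switches shrinkage off completely. Passing a pooled `within_var`, estimated across all players, avoids that.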

2. “I didn’t finish the wordle”

So, what happens if you don’t manage to get the wordle word in six guesses? Well, according to the wordle bot, you get X/6:

So, what happens if you start playing the wordle, make a couple guesses, and then decide you don’t want to finish it?

You also get X/6

This is a problem. It would make sense that people who use up all six guesses and still don’t get the answer get a score of 7, dragging up their average significantly. But for those who just decided to quit, should we really penalise them so harshly? If we just ignore them, then there is a strategy where, if you’ve used, say, 4 guesses and not succeeded, you could just not finish the game that day to “protect” your average. I couldn’t come up with a good answer for this, so the bot supports both treating X as 7 and ignoring those games entirely.
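Here is a minimal sketch of the two options. It assumes results arrive as the share-style strings the wordle bot shows (“3/6”, “X/6”). `parse_result` and `average` are hypothetical helper names.

```python
import re

def parse_result(result, x_as_seven=True):
    """Turn '4/6' or 'X/6' into a number of guesses.

    x_as_seven=True scores an X (failed or abandoned) as 7;
    x_as_seven=False returns None so the game can be dropped.
    """
    m = re.fullmatch(r"([1-6X])/6", result.strip())
    if m is None:
        raise ValueError(f"unrecognised result: {result!r}")
    if m.group(1) == "X":
        return 7 if x_as_seven else None
    return int(m.group(1))

def average(results, x_as_seven=True):
    """Average over a player's games, with X handled according to x_as_seven."""
    scores = [s for s in (parse_result(r, x_as_seven) for r in results) if s is not None]
    return sum(scores) / len(scores) if scores else None

print(average(["3/6", "4/6", "X/6"]))         # 4.666666666666667 (X counted as 7)
print(average(["3/6", "4/6", "X/6"], False))  # 3.5 (X ignored)
```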

3. Some days are harder than others

Ever had a wordle game like this?

It’s one where, even if you find quite a few correct letters, there are still so many possibilities that finding the correct one is really hard. There are some strategies to deal with this, like recognising that the number of options is high and playing a word that isn’t a possible answer but eliminates a lot of other possibilities. Even so, it’s fair to say that some days are more difficult than others. What if, by luck (or by observing other players’ results as they play!), some player avoids those hard days? Can we adjust for that?

This fix can be done quite easily. If we know the average score overall, then for a given day, if most people did worse than that, it’s a hard day, and if most did better, it’s an easy day. We can take the average score for the day and subtract the overall average to get an adjustment, which we then subtract from every player’s score for that day. If everyone plays every day, this doesn’t change the ranking at all. But if you skip hard days, everyone else gets credit for those days and you don’t, so their adjusted scores might end up a bit better than yours.
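Here is a rough Python sketch of that adjustment. It assumes results are stored as a mapping of day → {player: guesses}, which is a hypothetical layout and not necessarily the bot’s. It also takes the “overall average” to be the mean of every individual score.

```python
from statistics import mean

def difficulty_adjusted_averages(days):
    """days: {day_id: {player: guesses}}. Returns {player: adjusted average}."""
    overall = mean(g for day in days.values() for g in day.values())
    adjusted = {}
    for day in days.values():
        # Positive offset on a hard day, negative on an easy one
        offset = mean(day.values()) - overall
        for player, guesses in day.items():
            adjusted.setdefault(player, []).append(guesses - offset)
    return {player: mean(scores) for player, scores in adjusted.items()}

days = {1: {"A": 4, "B": 4, "C": 4}, 2: {"A": 6, "B": 6}}  # C skipped the hard day 2
print(difficulty_adjusted_averages(days))  # all three end up at (about) 4.8
```

In this made-up example, C has the best raw average (4, against 5 for A and B). But all three tied on the day they shared, so after the adjustment everyone comes out level.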

Ideally for this to work well, you want a lot of players. If there are only two players on a day then the “day difficulty” probably isn’t very accurate. But it’s still an adjustment you might want to have so I included it in the bot.

4. You can’t trust timestamps

So how does the bot actually work? It scans all the messages in a channel, finds the summary messages, and uses the results to calculate a leaderboard. The thing is, it’s nice to be able to get results from more than one channel. For example, your server owner might get so annoyed with you playing wordle every day in the general channel that they create a dedicated channel for it (this has happened in multiple servers I’m in). If you load results from multiple channels, you need to skip results you’ve already seen and combine the unique summaries into one full dataset. For uniqueness you need some ID, and I thought the date of each message’s timestamp would be a good one. It’s not. Of course timestamps would fail me:

I don’t know what schedule the wordle bot uses for these summaries. Maybe I could work it out, but trying to fudge it while still using timestamps seemed like hell. So I chose a different hell. Here’s an example of the images that come with each summary:

Enhance

Before you ask, yes, adding OCR (Optical Character Recognition) did slow down the process of scanning messages significantly. But it works reliably and gives me nice unique IDs I can use for all results, regardless of channel or server or timestamp, that can be saved to the DB.
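Here is a sketch of the merge step. It assumes each summary has already been turned into a (puzzle ID, {player: guesses}) pair, where the ID is whatever the OCR step reads off the image. The names and the tuple layout are hypothetical. Results are merged per puzzle, so a day that appears in two channels is counted once. Players who only appear in one of the copies are still kept.

```python
def merge_summaries(*channels):
    """Each channel is a list of (puzzle_id, {player: guesses}) summaries.

    Returns {puzzle_id: {player: guesses}} with duplicates removed.
    """
    merged = {}
    for channel in channels:
        for puzzle_id, results in channel:
            day = merged.setdefault(puzzle_id, {})
            for player, guesses in results.items():
                # A result we've already seen (e.g. from another channel) is skipped
                day.setdefault(player, guesses)
    return merged

# Hypothetical example: puzzle 1001 was summarised in both channels
general = [(1000, {"alice": 4, "bob": 5}), (1001, {"alice": 3})]
wordle = [(1001, {"alice": 3, "carol": 6}), (1002, {"bob": 2})]
print(merge_summaries(general, wordle))
```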

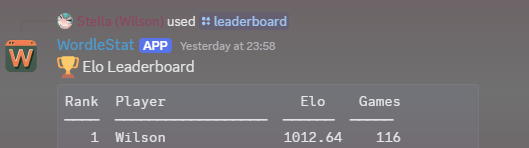

5. What if we use Elo Ratings

So far we’ve been calculating an average score and making some adjustments to that to create a leaderboard. But this isn’t the only way. If you’ve read some of this blog you should be aware of my fondness for Elo ratings, which you might know best as the chess rating system. Could we use that here?

Of course we can, what kind of question is that!

For every summary, we pretend it’s actually a set of pairwise games between everyone who played that day: n(n-1)/2 games, where n is the number of players. Your performance is compared to everyone else’s that day, and your Elo is updated for each comparison. How does that work, though?

Let’s say just two people play on a given day, and one (person A) gets 3/6 while the other (person B) gets 5/6. We could say person A wins, since they got a better score, and then the Elo update equation looks like this:

R'_A = R_A + K × (S_A – E_A)

The player’s new rating is equal to their current one, plus K times the difference between their score and their expected score. K is just a value you can set: it’s sometimes 32 (e.g. in chess), and it defaults to 2 in the bot. The expected score E_A is calculated from the two players’ ratings (you can see more details here). Essentially, if your score was good (e.g. 1) and your expected score was 0.5 (let’s say you have the same rating as the other player), then your rating will go up by K/2. It’s also symmetrical, so the other player’s rating will go down by K/2.

Here I said that we would use a score of 1 for a “win”, but the Elo update equation actually allows us to do better. S_A is the score player A got in the game, which is 1 minus the other player’s score. Normally we’d use 1 for a win, 0 for a loss and 0.5 for a draw. However, for any pair of results, the difference in guesses can range from 5 (or 6 if X counts as 7) to -5 (or -6). If we space these linearly, so 5 => 1, -5 => 0, 0 => 0.5, and everything in between proportionally, we get more granular information for the Elo update. This means that if you get 2/6 and someone else gets 6/6, you will “take” much more of their rating than if you get 3/6 and they get 4/6.
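Here is a minimal Python sketch of the update equation together with the linear “graded” score. It assumes the standard logistic Elo expected score, E_A = 1 / (1 + 10^((R_B – R_A)/400)). The function names are hypothetical, not taken from the bot.

```python
def expected_score(r_a: float, r_b: float) -> float:
    """Standard Elo expected score for A against B (logistic curve, 400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))

def graded_score(guesses_a: int, guesses_b: int, max_diff: int = 5) -> float:
    """Map the guess difference linearly onto [0, 1]: +max_diff -> 1, 0 -> 0.5, -max_diff -> 0.

    Fewer guesses is better, so A does well when guesses_b > guesses_a.
    Use max_diff=6 if X/6 is counted as 7.
    """
    return 0.5 + (guesses_b - guesses_a) / (2 * max_diff)

def elo_update(r_a: float, r_b: float, s_a: float, k: float = 2.0) -> tuple[float, float]:
    """R'_A = R_A + K (S_A - E_A); B moves by the same amount in the opposite direction."""
    delta = k * (s_a - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta

# Equal ratings and an outright win (S_A = 1): A gains K/2 = 1 point
print(elo_update(1500, 1500, 1.0))  # (1501.0, 1499.0)
```

With equal ratings and K = 2, a 2/6 vs 6/6 day moves about 0.8 points (S_A = 0.9). A 3/6 vs 4/6 day moves only about 0.2 (S_A = 0.6).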

OK great, so each day you iteratively update the Elos and bam, you get a nice score. It naturally benefits players who play a lot (or, put another way, doesn’t let lucky players get to the top in a couple of games!), because if you want a high Elo you have to work your way up, just like in chess or other competitive ranked games. It also handles day difficulty nicely. If everyone did poorly (or well) on a day, ratings won’t change much, because only the relative difference between players causes a rating change.
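Putting the pieces together, here is a sketch of what the day-by-day iteration might look like. The starting rating of 1500 is an assumption. So is the choice to apply all of a day’s pairwise changes at once (using the ratings from the start of the day) rather than one comparison at a time; the post doesn’t say which the bot does.

```python
from itertools import combinations

def iterated_elo(days, k=2.0, max_diff=5, start=1500.0):
    """days: chronological list of {player: guesses}. Returns {player: rating}."""
    ratings = {}
    for day in days:
        for player in day:
            ratings.setdefault(player, start)  # assumed starting rating for newcomers
        # Accumulate the day's changes first, so every comparison uses the ratings
        # from the start of the day and the order of the pairs doesn't matter
        changes = dict.fromkeys(day, 0.0)
        for a, b in combinations(day, 2):
            s_a = 0.5 + (day[b] - day[a]) / (2 * max_diff)                 # graded score
            e_a = 1.0 / (1.0 + 10 ** ((ratings[b] - ratings[a]) / 400))  # expected score
            changes[a] += k * (s_a - e_a)
            changes[b] -= k * (s_a - e_a)
        for player, change in changes.items():
            ratings[player] += change
    return ratings

print(iterated_elo([{"A": 3, "B": 5}]))  # A gains about 0.4, B loses about 0.4
print(iterated_elo([{"A": 6, "B": 6}]))  # {'A': 1500.0, 'B': 1500.0}
```

The second call shows the day-difficulty point from above. When both players get the same score, ratings don’t move at all, however hard the day was.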

So we’ve found the best method yeah?

6. The MAP Elo rating

There’s one slight problem with the iterated Elo method: it favors “newer” data. This isn’t necessarily a problem, because people get better over time and we probably want their Elo to reflect their current skill, not their all-time average. Some people have a rough start; should we really penalize them for that?

Yes

……

Well ok, maybe not, but let’s at least consider one more possible way of creating this leaderboard.

Consider that we would like to use Elo ratings, but we don’t want to update them after each game. We want an algorithm that considers all your results at the same time (removing the recency effect). To do this, we recognise that when we compare two Elo ratings, we get that expected score E_A. What if we could work out all the Elo ratings so that the differences between all the E_As and the actual results were as small as possible?

We totally can, and this is called the Maximum Likelihood Estimate (MLE): we pick the set of ratings under which the actual results are most likely, given that the expected result between two players is E_A. There are algorithms that, after a certain number of rounds of calculation, converge to the set of Elos for all players that best explains the results.

There’s just one problem: what if someone plays once, against only one other person that day, and gets a perfect score? This is unlikely in the case of wordle, but it could happen, and then the MLE Elo rating for that lucky individual is essentially infinite. I wrote about this problem in more detail in my post on ranking people with LLMs.

Look, we know nobody is infinitely good at anything, even the best players sometimes lose. If only there was some way to encode this prior knowledge about players into our algorithm for calculating their Elos with global result data…

The MAP (Maximum a Posteriori) estimate is a way to take a prior estimate of the distribution of player Elos and update it based on the global result data. It’s not that different from the idea of the MLE, but it’s a tad more mathematically involved. You can read about it here, specifically equation 27.
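To make the MLE/MAP difference concrete, here is a small sketch that fits all ratings at once by gradient ascent. This is my own simplified formulation, not the exact one from the linked reference. With `prior_sd=None`, it maximises the likelihood of the (graded) results under the Elo expected-score model. With a number, it adds an independent Gaussian prior centred on 1500 to every rating, which makes it a MAP estimate. The prior mean, standard deviation, step rule and iteration count are all assumptions.

```python
import math
from collections import Counter

C = math.log(10) / 400  # converts Elo points into natural log-odds

def fit_ratings(games, prior_sd=None, prior_mean=1500.0, iterations=500):
    """Fit all ratings at once from (player_a, player_b, s_a) games, s_a in [0, 1].

    prior_sd=None  -> maximum likelihood (only rating differences are meaningful).
    prior_sd=float -> MAP with a Normal(prior_mean, prior_sd**2) prior per player.
    """
    n_games = Counter()
    for a, b, _ in games:
        n_games[a] += 1
        n_games[b] += 1
    # Prior precision expressed in log-odds units (0 means no prior at all)
    prec = 0.0 if prior_sd is None else 1.0 / (C * prior_sd) ** 2
    theta = {p: 0.0 for p in n_games}  # log-odds offset from prior_mean

    for _ in range(iterations):
        # Gradient of log-likelihood + log-prior with respect to each theta
        grad = {p: -prec * theta[p] for p in theta}
        for a, b, s_a in games:
            e_a = 1.0 / (1.0 + math.exp(theta[b] - theta[a]))  # Elo expected score
            grad[a] += s_a - e_a
            grad[b] -= s_a - e_a
        # Damped diagonal step: 0.25 is the largest possible value of E(1 - E)
        for p in theta:
            theta[p] += 0.5 * grad[p] / (0.25 * n_games[p] + prec)

    return {p: prior_mean + t / C for p, t in theta.items()}

games = [("lucky", "other", 1.0)]  # one game, a perfect score
print(fit_ratings(games, prior_sd=200.0))  # lucky settles roughly 70 points above 1500
for iters in (100, 1000, 10000):
    print(iters, round(fit_ratings(games, iterations=iters)["lucky"]))  # MLE keeps climbing
```

On this “one game, perfect score” example, the MLE rating keeps growing the longer you run it, because there is no finite maximum. The MAP rating, pulled back by the prior, converges to a finite value.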

Why use this? Well, if you want to consider all data equally, and you have a lot of it, it avoids problems where, say, you’ve had an unlucky streak of games over the past 5 days. You can sort of achieve this in the iterated method by lowering K (making each step smaller), but the purest way is the MAP Elo.

How does the bot work though?

I ended up including all of these methods and ideas in the discord bot, which you can add to your server with the link here. Because of the somewhat dirty way it collects data, it needs both the “message content” and “server members” privileged intents. The latter is needed because, for some reason, the wordle bot’s @user links quite often don’t convert properly to user IDs.

From there, you can use /sync to read channel messages, /leaderboard to generate a leaderboard, with all the settings I’ve talked about, and /personal_stats to see, well, personal stats. It looks like this:

The bot itself uses Express, running on Node in a Docker container, with a Postgres DB to store results.

Email me at wilson@wilsoniumite.com if you need any help!

Final thoughts

As I was working on this, I was reminded of the quote “there are three kinds of lies: lies, damned lies, and statistics”. Each leaderboard, with different methods or settings, looks different. People can jump half a dozen places depending on the settings used. None is “clearly” better than the others, and the underlying wordle game is of course easy to cheat at anyways. But nonetheless, I thought this was fun, and the leaderboards are definitely nice when oneself is on top :D.